Example of private VLAN isolation across Virtual and Physical servers using ESX/dvSwitch and HP Networking Comware switches

The target was simple. We have an internal cloud datacenter at work that provides users and customers with both virtual machines and physical machines. Each machine has two network interface cards (NICs): one is under the control of the user/customer through an SDN layer, and the second is for our support team to monitor and help troubleshoot these machines when needed. This second NIC is our target today. In the past we used per-user or per-customer firewall separation, which was reliable but a configuration-intensive nightmare. However, once we learned that private VLANs are now supported by VMware's Distributed vSwitch (dvSwitch), we immediately tried to make it cooperate with private VLANs on physical switches. And since it worked like a charm, let me share a quick lab example with you. But theory first!


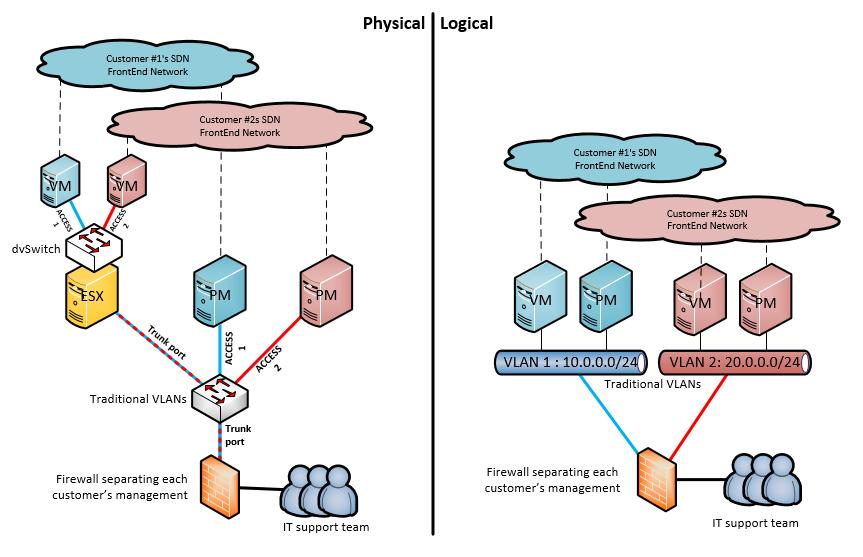

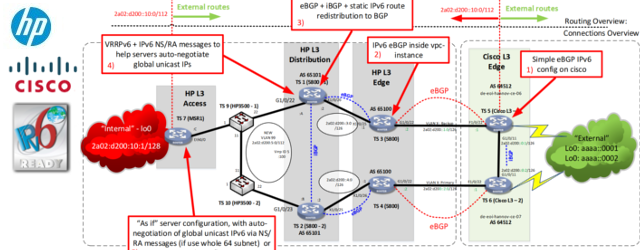

Theory of separating management rail between different customers with and without private VLANs

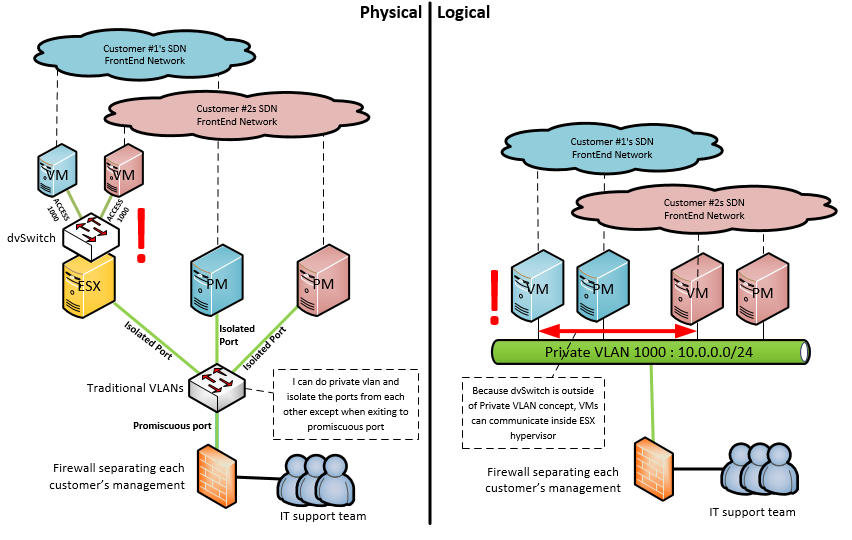

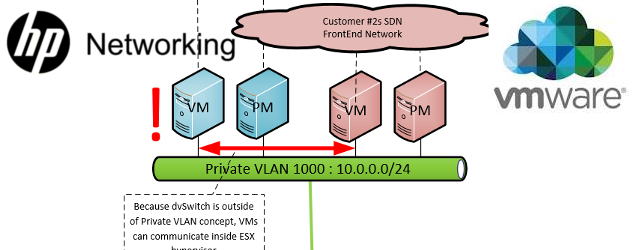

Fortunately, private VLANs arrived for most major vendors and promised the ability to have one giant subnet while separating every host from the others on L2. The basic principle is to declare each port as one of three types: promiscuous (can talk to any other port type), community (can talk to ports of the same community and to promiscuous ports), or isolated (can talk only to promiscuous ports). For our needs, we can simply declare the firewall port as promiscuous (so it can accept all traffic) and all other ports as isolated (so they can talk only to the firewall and not to each other), and this would be a clear-cut case for private VLANs. HOWEVER, that holds only if the network is full of physical servers, on any network hardware vendor, be it Cisco, Juniper or HPN. But what happens when virtual machines enter this scenario? Because hypervisors use internal software switches, the VMs might leak communication to each other, since private VLANs on the physical switches enforce separation only on physical links. Look at this scenario with VMs behind a hypervisor:

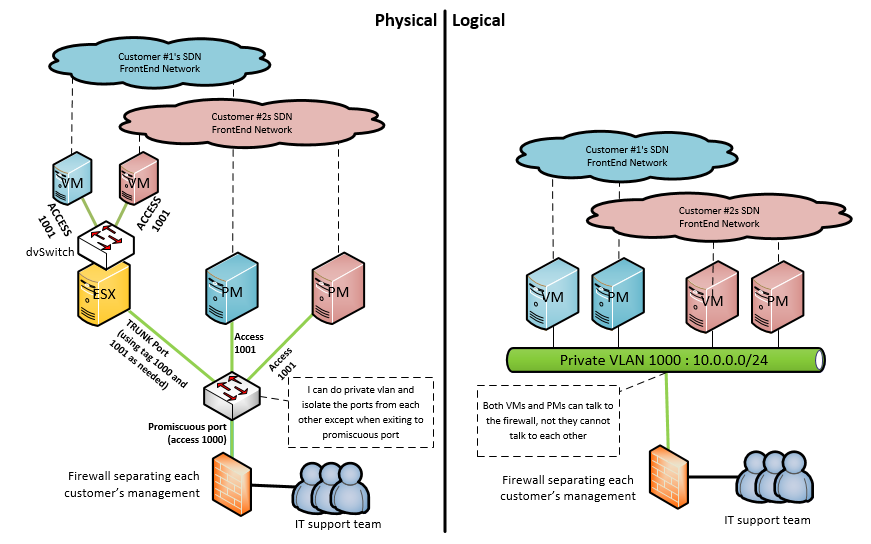
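To make the three port roles described above concrete, here is a minimal Python sketch of the "who can talk to whom" rule. The `Port` class, the `can_talk` function and the example port names are hypothetical and exist only for illustration; real switches enforce this in hardware, not in code like this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Port:
    name: str
    role: str                        # "promiscuous", "community" or "isolated"
    community: Optional[int] = None  # only meaningful for community ports


def can_talk(a: Port, b: Port) -> bool:
    """Return True if L2 traffic between two ports of one private VLAN is allowed."""
    # A promiscuous port can talk to every other port type.
    if "promiscuous" in (a.role, b.role):
        return True
    # Community ports can talk only inside their own community.
    if a.role == "community" and b.role == "community":
        return a.community == b.community
    # Everything else (isolated-isolated, isolated-community) is blocked.
    return False


firewall = Port("firewall", "promiscuous")
server_a = Port("server-a", "isolated")
server_b = Port("server-b", "isolated")

print(can_talk(firewall, server_a))  # firewall <-> isolated server
print(can_talk(server_a, server_b))  # isolated server <-> isolated server
```

With the firewall as the only promiscuous port, the first check is allowed and the second is blocked, which is exactly the separation we want on the management subnet.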

So what can we do to solve this between VMs and PMs? Simple: teach the virtual switches the private VLAN principle of using dot1q tags on trunks. You see, the private VLAN standard defines how to run private VLANs in a multi-switch topology by using a primary VLAN tag (for promiscuous ports) and secondary VLAN tags (for community/isolated ports), and marking packets with one of these tags, based on the source port type, whenever a switch needs to push a packet over a trunk. There is a nice explanation of this on packetlife.net, so I will jump right to applying it to virtual switches.

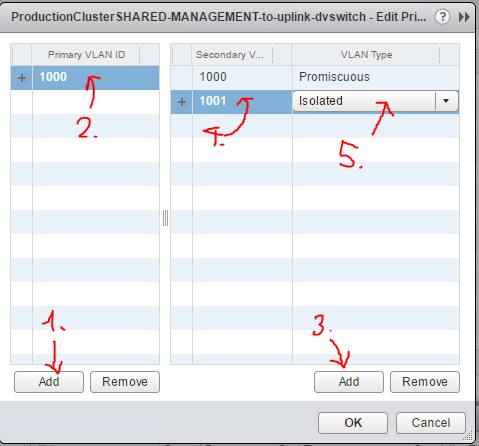

First, let's create two VLAN tag IDs in our lab: I will use VLAN 1000 as primary and VLAN 1001 as secondary.

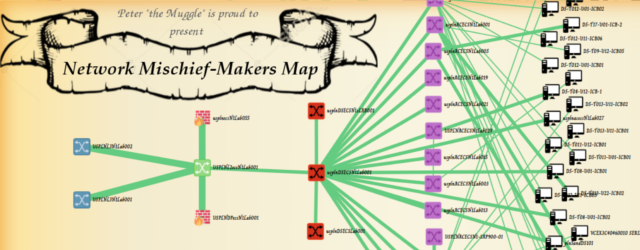

This means we can create a single shared subnet for management of all customer systems, both VMs and PMs, but we need to configure private VLANs on all physical switches and all virtual switches, and use the same VLAN tags for the primary and secondary VLANs everywhere. Our target here will be something like this:

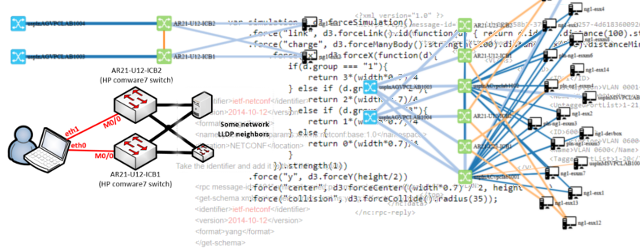

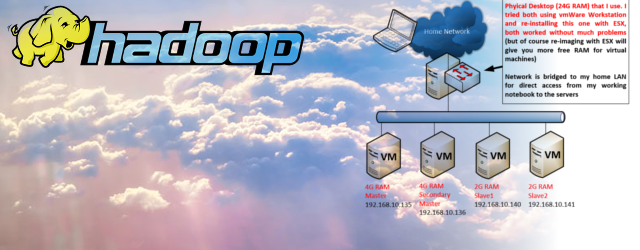

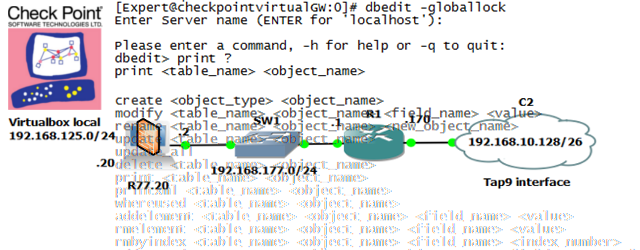
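The trunk tagging rule from this section can also be sketched in a few lines of Python. This is a simplified model under stated assumptions: it covers only the lab's two VLANs (1000 primary, 1001 isolated secondary), community VLANs would need their own secondary IDs and are not modelled, and the function names `trunk_tag` and `may_deliver` are hypothetical.

```python
PRIMARY_VLAN = 1000    # lab value used in this article
SECONDARY_VLAN = 1001  # lab value used in this article (isolated)


def trunk_tag(source_role: str) -> int:
    """802.1Q tag a frame carries on a trunk, chosen by the role of its source port."""
    if source_role == "promiscuous":
        return PRIMARY_VLAN
    if source_role == "isolated":
        return SECONDARY_VLAN
    raise ValueError(f"role not modelled in this sketch: {source_role}")


def may_deliver(tag: int, destination_role: str) -> bool:
    """Decide on the receiving switch whether a tagged frame may leave a local port."""
    if tag == PRIMARY_VLAN:
        # Sent by a promiscuous port: may reach any port.
        return True
    if tag == SECONDARY_VLAN:
        # Sent by an isolated port: may reach promiscuous ports only.
        return destination_role == "promiscuous"
    return False


# A VM on an isolated port group sends a frame across the trunk:
tag = trunk_tag("isolated")
print(tag, may_deliver(tag, "promiscuous"), may_deliver(tag, "isolated"))
```

The point is that the tag alone tells the receiving switch, physical or virtual, what kind of port the frame came from, so isolation survives the trip across the trunk.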

LAB Topology

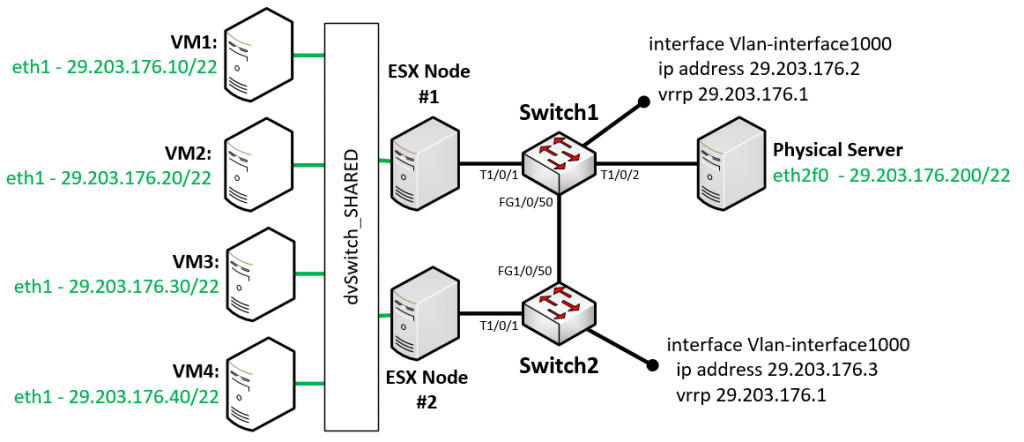

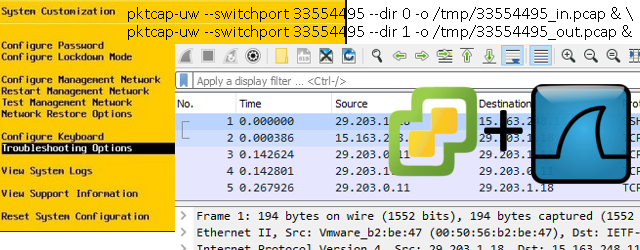

Now let’s get practical. This was my lab setup, shown in the picture. The target is to achieve complete isolation of all VMs and PMs on the network while still allowing all these systems to use the default gateway, which was a VRRP IP on a VLAN interface on the switches.

I was using ESX hosts version 6.0 and HPN 5940 series switches with Comware 7 – R2508 version.

Configuring HPN switches for Private VLANs

OK, let’s configure the HPN 5940s first. It all starts with the private VLAN definition:

    # Create primary private VLAN
    vlan 1000
     private-vlan primary
     name Management Private VLAN
     quit
    # Create secondary VLAN and define it as "isolated" type
    vlan 1001
     # THESE TWO COMMANDS BECAUSE VLAN 1001 can talk to VLAN 1001
     private-vlan isolated
     undo private-vlan community
     quit
    # Go back to primary VLAN and tie it with secondary VLAN
    vlan 1000
     private-vlan secondary 1001
     quit

Next, let’s look at the interfaces towards the ESX hosts. This is easy: we simply define them as normal trunks! So if you already had these as trunks, there is nothing much else to do here. The Ten-GigabitEthernet1/0/1 interface in my lab looks like this:

    interface Ten-GigabitEthernet1/0/1
     port link-mode bridge
     description link to ESX Nodes
     port link-type trunk
     port trunk permit vlan all

Next comes the port towards the physical server, and this is the interesting part, because you need to configure the port like this:

    interface Ten-GigabitEthernet1/0/2
     port link-mode bridge
     description link to physical server to isolate
     port link-type access
     port access vlan 1001
     port private-vlan host

But the funny part is that the private-vlan host command at the end is actually a macro that expands into several hybrid port commands:

    interface Ten-GigabitEthernet1/0/2
     port link-mode bridge
     port link-type hybrid
     undo port hybrid vlan 1
     port hybrid vlan 1000 to 1001 untagged
     port hybrid pvid vlan 1001
     port private-vlan host
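Why the expanded hybrid settings do the job is easier to see with a small model. The Python sketch below assumes generic hybrid-port behaviour (untagged ingress frames are classified by the PVID, and frames of VLANs listed as untagged leave without a tag); the function names are hypothetical, and the model ignores the extra private VLAN filtering the switch applies on top.

```python
from typing import Optional

PVID = 1001                    # from: port hybrid pvid vlan 1001
UNTAGGED_VLANS = {1000, 1001}  # from: port hybrid vlan 1000 to 1001 untagged


def ingress_vlan(tag: Optional[int]) -> Optional[int]:
    """VLAN a frame received from the server is placed in; None means dropped."""
    if tag is None:
        return PVID  # untagged frames are classified by the PVID
    return tag if tag in UNTAGGED_VLANS else None


def egress_action(vlan: int) -> str:
    """What happens to a frame of the given VLAN leaving towards the server."""
    return "send untagged" if vlan in UNTAGGED_VLANS else "drop"


print(ingress_vlan(None))   # server traffic lands in the isolated VLAN
print(egress_action(1000))  # gateway replies arrive in the primary VLAN
print(egress_action(1))     # VLAN 1 was removed by "undo port hybrid vlan 1"
```

So the server's untagged traffic enters the isolated VLAN 1001, while replies from the promiscuous side, which travel in primary VLAN 1000, are still delivered to the server untagged.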

And the grand finale: a promiscuous gateway interface is usually created on a physical port towards a router, but in my scenario (and in our production scenarios) we need the gateway router to be a VLAN interface located on a nearby switch. Luckily, HPN switches support this, and the configuration looks like this:

    # Switch 1
    interface Vlan-interface1000
     private-vlan secondary 1001
     ip address 29.203.176.2 255.255.252.0
     vrrp vrid 1 virtual-ip 29.203.176.1
     vrrp vrid 1 priority 110

    # Switch 2
    interface Vlan-interface1000
     private-vlan secondary 1001
     ip address 29.203.176.3 255.255.252.0
     vrrp vrid 1 virtual-ip 29.203.176.1
     vrrp vrid 1 priority 90
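As a quick sanity check of the addressing above, the following Python sketch uses the standard `ipaddress` module to confirm that both switch addresses and the VRRP virtual IP sit in the same subnet, and works out which switch should become the VRRP master. The addresses and priorities are copied from the configuration; the helper names are hypothetical, and the master calculation assumes both switches are up and uses the usual VRRP rule (highest priority wins, higher IP address breaks a tie).

```python
import ipaddress

# Values copied from the Vlan-interface1000 configuration in this article.
NETMASK = "255.255.252.0"
VIRTUAL_IP = "29.203.176.1"
SWITCHES = {
    "Switch 1": {"ip": "29.203.176.2", "priority": 110},
    "Switch 2": {"ip": "29.203.176.3", "priority": 90},
}

# The shared management subnet, derived from the virtual IP and the netmask.
network = ipaddress.ip_interface(f"{VIRTUAL_IP}/{NETMASK}").network


def in_shared_subnet(ip: str) -> bool:
    """True if the address belongs to the shared management subnet."""
    return ipaddress.ip_address(ip) in network


def expected_master() -> str:
    """Switch expected to own the virtual IP when both switches are up."""
    return max(
        SWITCHES,
        key=lambda name: (
            SWITCHES[name]["priority"],
            ipaddress.ip_address(SWITCHES[name]["ip"]),
        ),
    )


print(network)
print(all(in_shared_subnet(sw["ip"]) for sw in SWITCHES.values()))
print(expected_master())
```

The mask 255.255.252.0 gives a /22, so this one management subnet has room for roughly a thousand isolated hosts behind a single gateway.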

Well, that is it: only a few extra commands on top of the very familiar VLAN declarations and interface configurations, so this should not be an issue for you. I would guess the same holds for other vendors.

Configuring vCenter’s Distributed vSwitch (dvSwitch) for Private VLANs

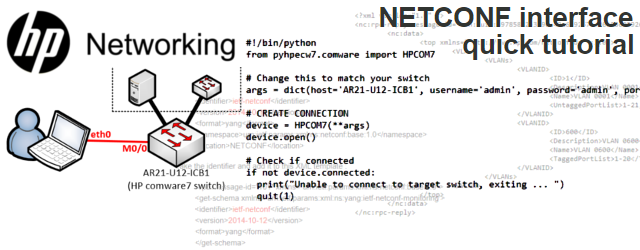

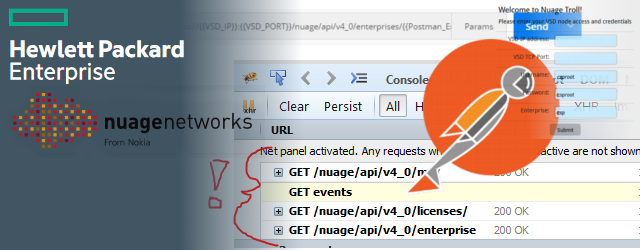

Moving on to the VMware world, this part was what I didn’t know originally and needed to research (see the external guides at the end). But it is very easy indeed, as it is really just a few configuration steps in the centralized dvSwitch settings.

Note/Disclaimer: This guide expects that you have an ESX host that is using a Distributed vSwitch (dvSwitch) deployed across all your ESX nodes. In my lab I had two nodes called Node #1 and Node #2 (as visible in the lab topology picture above).

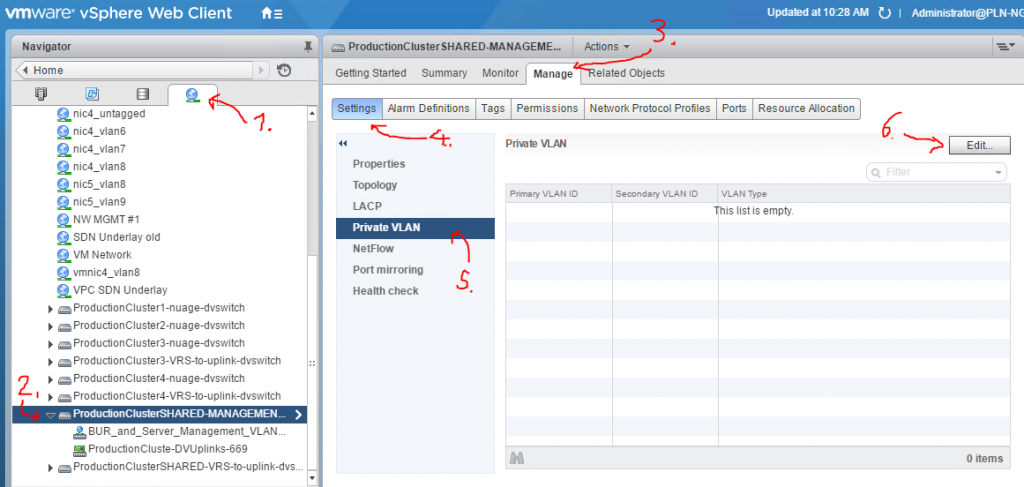

Step #1: Find the dvSwitch entity and configure the VLAN 1000 as primary and 1001 as secondary – isolated.

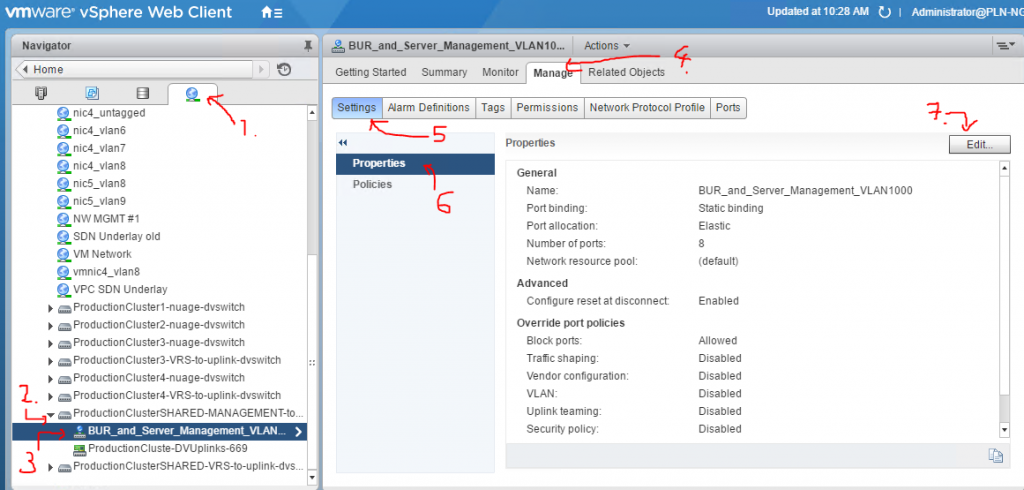

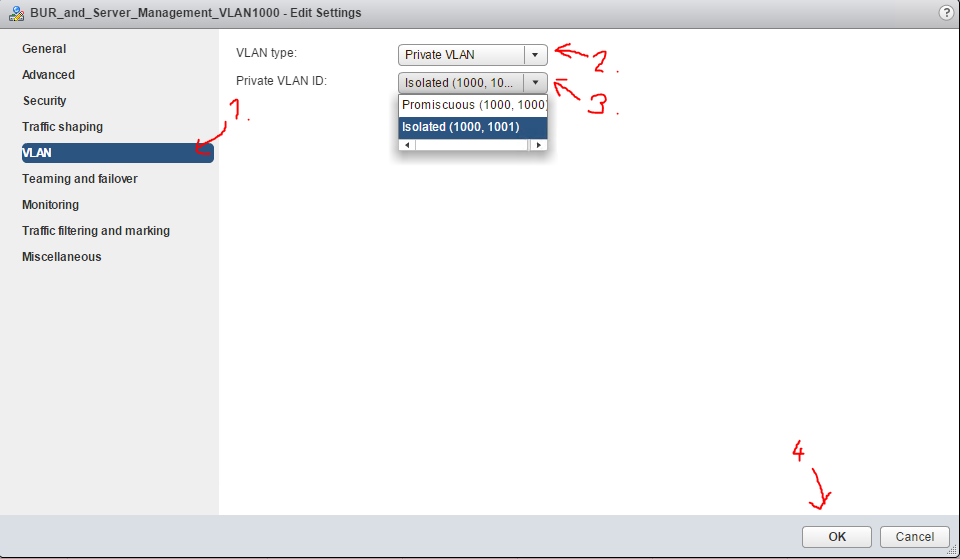

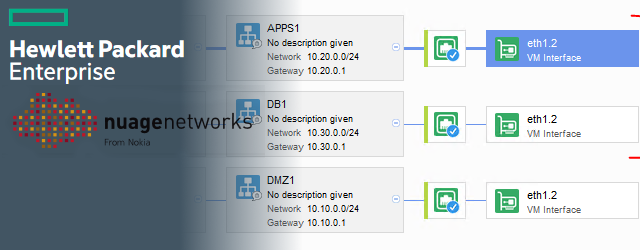

Step #2: In the configuration of the port group that your VMs are connected to, assign it to the isolated VLAN.

Results & Summary

As was our target all along, after this configuration all the VMs and the physical server in the topology cannot access each other, as they are members of the isolated secondary VLAN 1001, but they can access the promiscuous gateway interface (hosting the VRRP gateway IP) because it is assigned to primary VLAN 1000.

See external guides:

- ESX – Private VLANs Configuration Knowledge Database

- HP Networking Private VLANs configuration PDF (for 5940 series switch)

- Private VLANs basic explanation – courtesy of Packet Life

Thank you for the explanation! In our case we don’t use VRRP, so we want the traffic to reach the router. There is surprisingly little information about this case in posts on the internet, as if everybody isolated the servers from the internet too, which is hardly ever the case. It would be simple if routers accepted a private VLAN configuration: just configure it there and add all related VLANs to all trunks between the router and the private VLAN switch. But this seems to be more of a switch L2 feature, and our Fortigate didn’t have any such configuration available.

So for that, I got from another post an additional configuration that was needed for the uplink port configuration (BAGG1 in our case):

port private-vlan 1000 trunk promiscuous

After this, the next switch behind the uplink port didn’t need VLAN 1001 anymore, the previous command put the VLANs’ traffic “suitably” into VLAN 1000.

Also, in our case we didn’t have any other physical servers (or other customers) so we didn’t have to take care of any ports of the switch, just tagged them all with all VLANs.
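The effect of the trunk promiscuous uplink described in this comment can be pictured as a tag rewrite on the way out. The Python sketch below is only a model of that observation, using the VLAN IDs from the article; the mapping table and the `uplink_egress_tag` function are hypothetical names, not anything the switch exposes.

```python
# Secondary VLANs mapped to their primary VLAN (IDs from the article).
SECONDARY_TO_PRIMARY = {1001: 1000}


def uplink_egress_tag(vlan: int) -> int:
    """Tag a frame carries when leaving through the trunk promiscuous uplink."""
    # Frames from a secondary VLAN are presented upstream in the primary VLAN;
    # frames of any other VLAN keep their tag.
    return SECONDARY_TO_PRIMARY.get(vlan, vlan)


print(uplink_egress_tag(1001))  # isolated host traffic leaves as primary VLAN
print(uplink_egress_tag(1000))  # primary VLAN is left unchanged
```

This is why the next device behind the uplink only needs to know about VLAN 1000: it never sees the secondary tag.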